How to install a Squid & Dansguardian content filter on Ubuntu Server

I’m a family man and a geek, so our household has both children and lots of tech: there are 6 or so computers, various tablets, smartphones and other devices capable of connecting to, and displaying content from, the Internet.

For a while now I’ve wanted to provide a degree of content filtering on our network to prevent accidental, or deliberate, access to some of the worst things the Internet has to offer. What I didn’t want to do however was blindly hand control of this very important job to my ISP (as our beloved leader would like us all to do). Also, I absolutely believe this is one of my responsibilities as a parent; it is not anyone else’s. In addition, there are several problems I have with our government’s chosen approach:

- Filtering at the ISP network-side means the ISP must try and inspect all my internet traffic all of the time (what else could they potentially do with this information I wonder?)

- If the ISP’s filter prevents access to content which we feel that our kids should be able to access, how can I change that? Essentially I can’t.

- I reckon that most kids of mid-teenage years will have worked out ways to bypass these filters anyway (see footnote) leaving more naive parents in blissful ignorance; thinking their kids are protected when in fact they are not.

With the above in mind I set about thinking how I could provide a degree of security on our home network using tried and trusted Open Source tools…

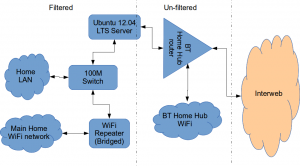

Firstly, this is how our network looked before.

The BT Router is providing the DHCP service in the above diagram.

The Ubuntu 12.04 Server is called vimes (after Commander Vimes in the Discworld novels by Terry Pratchett) and is still running the same hardware that I described way back in 2007! It’s a low power VIA C7 processor, 1GB of RAM and it now has a couple of Terabytes of disk. It’s mainly used as a central backup controller and DLNA media store/server for the house.

I never did get Untangle working on it, but now it seemed like a good device to use to do some filtering… There are loads of instructions on the Internet about using Squid & Dansguardian but none covered quite what I wanted to achieve: A dhcp serving, bridging, transparent proxy content filter.

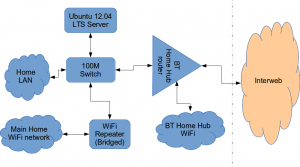

Architecturally, my network needed to look like this:

As you can see above, the physical change is rather negligible. The Ubuntu server now sits between the home LAN and the broadband router rather than as just another network node on the LAN as it was before.

The configuration of the server to provide what I required can be broken down into several steps.

1. Get the Ubuntu server acting as a transparent bridge

This is relatively straightforward. First install the bridge-utils package: sudo apt-get install bridge-utils

Then I made a backup of my /etc/network/interfaces file and replaced it with this one:

# This file describes the network interfaces available on your system

# and how to activate them. For more information, see interfaces(5).

# The loopback network interface

auto lo

iface lo inet loopback

# Set up interfaces

iface eth0 inet manual

iface eth1 inet manual

# Bridge setup

auto br0

iface br0 inet static

bridge_ports eth0 eth1

address 192.168.1.2

broadcast 192.168.1.255

netmask 255.255.255.0

gateway 192.168.1.1

Probably the most interesting part of this file is where we assign a static IP address to the bridge itself. Without this I would not be able to connect to this server as both ethernet ports are now just transparent bridge ports so not actually listening for IP traffic at all.

(Obviously you will need to determine the correct IP address scheme for your own network)
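Before rebooting onto a new interfaces file, it may be worth checking that the bridge address and the gateway really do sit on the same network, since a typo here could leave the server unreachable. The helper below is only a sketch: `same_slash24` is a made-up name, and it assumes a 255.255.255.0 netmask as in the file above.

```shell
#!/bin/sh
# Hypothetical helper (not part of any package): succeeds when two IPv4
# addresses share their first three octets, i.e. sit in the same /24.
same_slash24() {
    [ "${1%.*}" = "${2%.*}" ]
}

# Addresses taken from the interfaces file above; substitute your own.
if same_slash24 192.168.1.2 192.168.1.1; then
    echo "bridge and gateway share a /24"
else
    echo "bridge and gateway are on different networks" >&2
fi
```

Once the bridge is up, `brctl show` and `ip addr show br0` should list both ports and the static address.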

2. Disable DHCP on the router and let Ubuntu do it instead

The reason for this is mostly down to the BT Home Hub… For some bizarre reason, BT determined that they should control what DNS servers you can use. Although I’m not using it right now, I might choose to use OpenDNS for example, but I can’t change the DNS addresses served by the BT Home Hub router so the only way I can control this is to turn off DHCP on the router altogether and do it myself.

Install the dhcp server: sudo apt-get install dhcp3-server

Tell the dhcp server to listen for requests on the bridge port we created before by editing the file /etc/default/isc-dhcp-server so that the INTERFACES line reads: INTERFACES="br0".

Then edit the dhcp configuration file /etc/dhcp/dhcpd.conf so we allocate the IP addresses we want to our network devices. This is how mine looks:

ddns-update-style none;

default-lease-time 600;

max-lease-time 7200;

# If this DHCP server is the official DHCP server for the local

# network, the authoritative directive should be uncommented.

authoritative;

# Use this to send dhcp log messages to a different log file (you also

# have to hack syslog.conf to complete the redirection).

log-facility local7;

subnet 192.168.1.0 netmask 255.255.255.0 {

range 192.168.1.16 192.168.1.254;

option subnet-mask 255.255.255.0;

option routers 192.168.1.1;

#Google DNS

option domain-name-servers 8.8.8.8, 8.8.4.4;

#OpenDNS

#option domain-name-servers 208.67.222.222, 208.67.220.220;

option broadcast-address 192.168.1.255;

}

There are many options and choices to make regarding setting up your DHCP server. It is extremely flexible; you will probably need to consult the man pages and other on-line resources to determine what is best for you. Mine is very simple. It serves one block of IP addresses within the range 192.168.1.16 to 192.168.1.254 to all devices. Currently I’m using Google’s DNS servers but as you can see I’ve also added OpenDNS as a comment so I can try it later if I want to.
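One thing that is easy to get wrong is letting the DHCP pool overlap the addresses you have assigned by hand (the router and the bridge in my case). The following is a rough sketch of that check; `in_pool` and `pool_size` are invented helper names, and it assumes everything lives in a single /24 so only the last octet matters.

```shell
#!/bin/sh
# Pool bounds copied from the "range" line in dhcpd.conf above
# (last octet only, since everything lives in 192.168.1.0/24).
POOL_FIRST=16
POOL_LAST=254

# Hypothetical helper: succeeds when the address's last octet is in the pool.
in_pool() {
    octet="${1##*.}"
    [ "$octet" -ge "$POOL_FIRST" ] && [ "$octet" -le "$POOL_LAST" ]
}

# Number of leases the pool can hand out.
pool_size() {
    echo $((POOL_LAST - POOL_FIRST + 1))
}

echo "pool size: $(pool_size)"
# The router (.1) and the bridge (.2) are statically configured.
for addr in 192.168.1.1 192.168.1.2; do
    if in_pool "$addr"; then
        echo "WARNING: static address $addr is inside the DHCP pool"
    else
        echo "ok: $addr is outside the DHCP pool"
    fi
done
```

The ISC server can also syntax-check its own configuration before a restart with `sudo dhcpd -t -cf /etc/dhcp/dhcpd.conf`.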

3. Install Squid and get it working as a transparent proxy using IPTables

This bit took a while to get right but, as with most things it seems to me, in the end the actual configuration is fairly straightforward.

Install Squid: sudo apt-get install squid.

Edit the Squid configuration file /etc/squid3/squid.conf… By default this file contains a lot of settings. I made a backup and then reduced it to just those lines that needed changing so it looked like this:

http_port 3128 transparent

acl localnet src 192.168.1.0/24

acl localhost src 127.0.0.1/255.255.255.255

acl CONNECT method CONNECT

http_access allow localnet

http_access allow localhost

always_direct allow all

cache_dir aufs /var/spool/squid3 50000 16 256

Probably the most interesting part in the above is the word “transparent” after the proxy port. Essentially this means we do not have to configure every browser on our network: http://en.wikipedia.org/wiki/Proxy_server#Transparent_proxy. The final line of the file is just some instructions to configure where the cache is stored and how big it is. Again, there are tons of options available which the reader will need to find out for themselves…

Getting all the traffic on our LAN to go through the proxy, rather than just passing through the bridge transparently, requires a bit more configuration on the server: ebtables to tell the Linux kernel’s bridge which frames to hand up to the IP stack, and iptables to redirect particular TCP ports to the proxy.

First I installed ebtables: sudo apt-get install ebtables

My very simplistic understanding of the following command is that it essentially tells the bridge to identify IP traffic for port 80 (http) and pass this up to the kernel’s IP stack for further processing (routing) which we then use iptables to handle.

sudo ebtables -t broute -A BROUTING -p IPv4 --ip-protocol 6 --ip-destination-port 80 -j redirect --redirect-target ACCEPT

Then we tell iptables to forward all port 80 traffic from the bridge to our proxy:

sudo iptables -t nat -A PREROUTING -i br0 -p tcp --dport 80 -j REDIRECT --to-port 3128

Restart Squid: sudo service squid3 restart

At this point http browser traffic should now be passing through your bridge and squid proxy before going on to the router and Internet. You can test to see if it is working by tailing the squid access.log file.
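Watching the log live is just `sudo tail -f /var/log/squid3/access.log` (that path is the Ubuntu squid3 default as far as I know; adjust if yours differs). To see at a glance which devices are actually going through the proxy, something like the sketch below may help. It assumes Squid's native log format, where the third field is the client address, and `requests_per_client` is a name I made up.

```shell
#!/bin/sh
# Count requests per client IP from Squid's native access.log format,
# where the third whitespace-separated field is the client address.
# Reads the files given as arguments, or standard input if there are none.
requests_per_client() {
    awk '{ count[$3]++ } END { for (ip in count) print count[ip], ip }' "$@" |
        sort -rn
}

# Illustrative log lines only (made up for this example, not real traffic).
requests_per_client <<'EOF'
1380000000.123     45 192.168.1.20 TCP_MISS/200 1520 GET http://example.com/ - DIRECT/93.184.216.34 text/html
1380000001.456     12 192.168.1.21 TCP_HIT/200 830 GET http://example.com/logo.png - NONE/- image/png
1380000002.789     30 192.168.1.20 TCP_MISS/200 2210 GET http://example.org/ - DIRECT/93.184.216.34 text/html
EOF
```

On the server itself you would pass the real log file as the argument instead of the sample lines.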

I found that squid seemed to be very slow at this juncture. So I resorted to some google fu and looked for some help on tuning the performance of the system. I came across this post and decided to try the configuration suggestions by adding the following lines to my squid.conf file:

#Performance Tuning Options

hosts_file /etc/hosts

dns_nameservers 8.8.8.8 8.8.4.4

cache_replacement_policy heap LFUDA

cache_swap_low 90

cache_swap_high 95

cache_mem 200MB

logfile_rotate 10

memory_pools off

maximum_object_size 50 MB

maximum_object_size_in_memory 50 KB

quick_abort_min 0 KB

quick_abort_max 0 KB

log_icp_queries off

client_db off

buffered_logs on

half_closed_clients off

log_fqdn off

This made an immediate and noticeable difference to the performance; enough so in fact that I haven’t yet bothered to go any further with tuning investigations. Thanks to the author Tony at last.fm for the suggestions.

4. Install Dansguardian and get it filtering content

sudo apt-get install dansguardian is all you need to install the application.

To get it to work with our proxy I needed to make a couple of changes to the configuration file /etc/dansguardian/dansguardian.conf.

First, remove or comment out the line at the top that reads UNCONFIGURED - Please remove this line after configuration. I just prefixed it with a #.

Next we need to configure the ports by changing two lines so they look like this:

filterport = 8080

proxyport = 3128

Finally, and I think this is right, we need to set it so that Dansguardian and squid are both running as the same user so edit these two lines:

daemonuser = 'proxy'

daemongroup = 'proxy'

As you will see in that file, there are loads of other configuration options for Dansguardian and I will leave it up to the reader to investigate these at their leisure.
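If you would rather script the edits described above than make them by hand, the following sketch shows one way to do it with sed. `configure_dansguardian` is a hypothetical helper of my own, the sample file contents are invented for the demonstration, and the patterns assume the option names appear at the start of a line (optionally commented out with #).

```shell
#!/bin/sh
# Sketch: apply the changes described above to a dansguardian.conf.
# configure_dansguardian is a hypothetical helper; pass it the path to edit.
configure_dansguardian() {
    conf="$1"
    tmp="$(mktemp)" || return 1
    # Write to a temp file, then copy back so the original file keeps its
    # ownership and permissions.
    sed -e 's/^UNCONFIGURED/#UNCONFIGURED/' \
        -e 's/^filterport *=.*/filterport = 8080/' \
        -e 's/^proxyport *=.*/proxyport = 3128/' \
        -e "s/^#* *daemonuser *=.*/daemonuser = 'proxy'/" \
        -e "s/^#* *daemongroup *=.*/daemongroup = 'proxy'/" \
        "$conf" > "$tmp" && cat "$tmp" > "$conf"
    status=$?
    rm -f "$tmp"
    return $status
}

# Demonstrate on a throwaway sample rather than the live file.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
UNCONFIGURED - Please remove this line after configuration
filterport = 8081
proxyport = 8080
#daemonuser = 'nobody'
#daemongroup = 'nobody'
EOF
configure_dansguardian "$sample"
cat "$sample"
rm -f "$sample"
```

On the real system this would be run as root against /etc/dansguardian/dansguardian.conf, after taking a backup.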

One suggestion I came across on my wanderings around the Interwebs was to grab a copy of one of the large collections of blacklisted sites records and install these into /etc/dansguardian/blacklists/. I used the one linked to from the Dansguardian website here http://urlblacklist.com/ which says it is OK to download once for free. As I understand it, having a list of blacklist sites will reduce the need for Dansguardian to parse every url or all content but this shouldn’t be relied on as the only mechanism as obviously the blacklist will get out-of-date pretty quickly.

Dansguardian has configurable lists of “phrases” and “weights” that you can tailor to suit your needs.

Now that’s installed we need to go back and reconfigure the iptables rules so that traffic is sent to Dansguardian first rather than straight to Squid, and also enable communication between Squid and Dansguardian. The existing redirect lives in the nat table, so flush (empty) that table by running sudo iptables -t nat -F; a plain iptables -F only clears the filter table and would leave the old rule in place.

Now re-enter the rules as follows:

sudo iptables -t nat -A PREROUTING -i br0 -p tcp --dport 80 -j REDIRECT --to-port 8080

sudo iptables -t nat -A OUTPUT -p tcp --dport 80 -m owner --uid-owner proxy -j ACCEPT

sudo iptables -t nat -A OUTPUT -p tcp --dport 3128 -m owner --uid-owner proxy -j ACCEPT

sudo iptables -t nat -A OUTPUT -p tcp --dport 3128 -j REDIRECT --to-ports 8080
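Typing four long rules by hand invites typos, so here is a sketch that prints them for a given pair of ports so they can be reviewed first. `filter_rules` is a made-up helper name; the interface (br0) and user (proxy) are the ones used in this post. It starts by flushing the nat table, because that is the table the earlier redirect was added to.

```shell
#!/bin/sh
# Sketch: print the NAT rules from this step for the given ports, so they can
# be reviewed (or piped to "sudo sh") instead of being typed by hand.
# filter_rules is a hypothetical helper name.
filter_rules() {
    filter_port="$1"   # Dansguardian's filterport
    proxy_port="$2"    # Squid's http_port
    cat <<EOF
iptables -t nat -F
iptables -t nat -A PREROUTING -i br0 -p tcp --dport 80 -j REDIRECT --to-port $filter_port
iptables -t nat -A OUTPUT -p tcp --dport 80 -m owner --uid-owner proxy -j ACCEPT
iptables -t nat -A OUTPUT -p tcp --dport $proxy_port -m owner --uid-owner proxy -j ACCEPT
iptables -t nat -A OUTPUT -p tcp --dport $proxy_port -j REDIRECT --to-ports $filter_port
EOF
}

# Only prints the commands; nothing is changed on the system.
filter_rules 8080 3128
```

When the output looks right, `filter_rules 8080 3128 | sudo sh` would apply it.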

Restart Squid and Dansguardian: sudo service squid3 restart && sudo service dansguardian restart.

Now if you try to connect to the internet from behind the server your requests should be passed through Dansguardian and Squid automatically. If you try and visit something that is inappropriate your request should be blocked.

If it all seems to be working OK then I suggest making your ebtables and iptables rules permanent so they are restored after a reboot.

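For iptables, note that running sudo iptables-save on its own only prints the current rules. To make them permanent the output needs to be saved to a file (for example sudo sh -c "iptables-save > /etc/iptables.rules") and loaded again at boot with iptables-restore, or handled by a package such as iptables-persistent.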

I followed these very helpful instructions to achieve a similar thing for the ebtables rule.
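For the iptables side, one common pattern on Ubuntu is to dump the rules to a file and restore that file whenever the interface comes up. The sketch below follows that pattern; the file location in `RULES_FILE`, the `SAVE_NOW` switch and the `restore_hook` helper are all my own assumptions rather than anything the packages mandate.

```shell
#!/bin/sh
# Sketch of one common way to persist iptables rules on Ubuntu: dump them to a
# file, then restore that file when the bridge interface comes up.
# RULES_FILE is an assumed location, not something the packages mandate.
RULES_FILE="${RULES_FILE:-/etc/iptables.rules}"

# Print the line to add under "iface br0 inet static" in
# /etc/network/interfaces so the rules are reloaded at boot.
restore_hook() {
    echo "pre-up iptables-restore < $RULES_FILE"
}

# Only touch the system when explicitly asked to (SAVE_NOW=1, run as root).
if [ "${SAVE_NOW:-0}" = "1" ]; then
    iptables-save > "$RULES_FILE"
fi

restore_hook
```

Run with `sudo SAVE_NOW=1 sh script.sh` to write the file, then add the printed line to the br0 stanza.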

And that’s it. Try rebooting the server to make sure that it all still works without you having to re-configure everything. Then ask your kids and wife to let you know if things that they want to get to are being blocked. YOU now have the ability to control this – not your ISP… 😀

Footnotes

Be aware that on the network diagrams above the Wifi service provided by the BT Home Hub router, and the LAN on the router side of the server, are not protected by these instructions. For me this is fine as the coverage of that Wifi network only makes it as far as the Kitchen anyway. And if it was more visible I could always change the key and only let my wife and me have access.

Also, I should make it clear that I know what I have above is not foolproof. I am completely aware that filtering/monitoring encrypted traffic is virtually impossible and there are plenty of services available that provide ways to circumvent what I have here. But I am also not naive and I reckon that if my kids have understood enough about networking and protocols etc. to be able to use tunnelling proxies or VPN services then they are probably mature enough to decide for themselves what they want to look at.

Of course there are plenty of additional mechanisms one can put in place if desired.

- Time-based filters preventing any Internet access at all at certain times

- Confiscation of Internet connected devices at bedtime

- Placing computers and gaming consoles in public rooms of the house and not in bedrooms

- And many more I’m sure you can think of yourself

As I see it, the point is simply this: As a parent, this is your responsibility…

How to install OpenERP 6.0 on Ubuntu 10.04 LTS Server (Part 1)

Update: 22/02/2012. OpenERP 6.1 was released today. I’ve written a howto for this new version here.

Recently at work, we’ve been setting up several new instances of OpenERP for customers. Our server operating system of choice is Ubuntu 10.04 LTS.

Installing OpenERP isn’t really that hard, but having seen several other “How Tos” on-line describing various methods where none seemed to do the whole thing in what I consider to be “the right way”, I thought I’d explain how we do it. There are a few forum posts that I’ve come across where the advice is just plain wrong too, so do be careful.

As we tend to host OpenERP on servers that are connected to the big wide Internet, our objective is to end up with a system that is:

- A: Accessible only via encrypted (SSL) services from the GTK client, Web browser, WebDAV and CalDAV

- B: Readily upgradeable and customisable

One of my friends said to me recently, “surely it’s just sudo apt-get install openerp-server isn’t it?” Fair enough; this would actually work. But there are several problems I have with using a packaged implementation in this instance:

- Out-of-date. The latest packaged version I could see, in either the Ubuntu or Debian repositories, was 5.0.15. OpenERP is now at 6.0.3 and is a major upgrade from the 5.x series.

- Lack of control. Being a business application, with many configuration choices, it can be harder to tweak your way when the packager determined that one particular way was the “true path”.

- Upgrades and patches. Knowing how, where and why your OpenERP instance is installed the way it is, means you can decide when and how to update it and patch it, or add custom modifications.

So although the way I’m installing OpenERP below is manual, it gives us a much more fine-grained level of control. Without further ado then here is my way as it stands currently (“currently” because you can almost always improve things. HINT: suggestions for improvement gratefully accepted).

[Update 18/08/2011: I’ve updated this post for the new 6.0.3 release of OpenERP]

Step 1. Build your server

I install just the bare minimum from the install routine (you can install the openssh-server during the install procedure or install subsequently depending on your preference).

After the server has restarted for the first time I install the openssh-server package (so we can connect to it remotely) and denyhosts to add a degree of brute-force attack protection. There are other protection applications available: I’m not saying this one is the best, but it’s one that works and is easy to configure and manage. If you don’t already, it’s also worth looking at setting up key-based ssh access, rather than relying on passwords. This can also help to limit the potential of brute-force attacks. [NB: This isn’t a How To on securing your server…]

sudo apt-get install openssh-server denyhosts

Now make sure you are running all the latest patches by doing an update:

sudo apt-get update

sudo apt-get dist-upgrade

Although not always essential it’s probably a good idea to reboot your server now and make sure it all comes back up and you can still login via ssh.

Now we’re ready to start the OpenERP install.

Step 2. Create the OpenERP user that will own and run the application

sudo adduser --system --home=/opt/openerp --group openerp

This is a “system” user. It is there to own and run the application, it isn’t supposed to be a person type user with a login etc. In Ubuntu, a system user gets a UID below 1000, has no shell (well it’s actually /bin/false) and has logins disabled. Note that I’ve specified a “home” of /opt/openerp, this is where the OpenERP server, and optional web client, code will reside and is created automatically by the command above. The location of the server code is your choice of course, but be aware that some of the instructions and configuration files below may need to be altered if you decide to install to a different location.
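To confirm the account really did come out as a system user, you can inspect its passwd entry. The sketch below wraps that check in a small function; `looks_like_system_account` is an invented name, and the list of "no login" shells is my own assumption about what counts.

```shell
#!/bin/sh
# Hypothetical helper: given one passwd(5)-style line, report whether it looks
# like the system account described above (UID below 1000, no usable shell).
looks_like_system_account() {
    uid="$(printf '%s\n' "$1" | cut -d: -f3)"
    shell="$(printf '%s\n' "$1" | cut -d: -f7)"
    [ "$uid" -lt 1000 ] || return 1
    case "$shell" in
        /bin/false|/usr/sbin/nologin|/sbin/nologin) return 0 ;;
        *) return 1 ;;
    esac
}

# On the server, feed it the real entry; getent prints nothing if the user
# does not exist, so guard against an empty line.
entry="$(getent passwd openerp 2>/dev/null)"
if [ -n "$entry" ] && looks_like_system_account "$entry"; then
    echo "openerp is a system account"
else
    echo "openerp is missing or not a system account"
fi
```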

Step 3. Install and configure the database server, PostgreSQL

sudo apt-get install postgresql

Then configure the OpenERP user on postgres:

First change to the postgres user so we have the necessary privileges to configure the database.

sudo su - postgres

Now create a new database user. This is so OpenERP has access rights to connect to PostgreSQL and to create and drop databases. Remember what your choice of password is here; you will need it later on:

createuser --createdb --username postgres --no-createrole --no-superuser --pwprompt openerp

Enter password for new role: ********

Enter it again: ********

[Update 18/08/2011: I have added the --no-superuser switch. There is no need for the openerp database user to have superuser privileges.]

Finally exit from the postgres user account:

exit

Step 4. Install the necessary Python libraries for the server

sudo apt-get install python python-psycopg2 python-reportlab \

python-egenix-mxdatetime python-tz python-pychart python-mako \

python-pydot python-lxml python-vobject python-yaml python-dateutil \

python-webdav

And if you plan to use the Web client install the following:

sudo apt-get install python-cherrypy3 python-formencode python-pybabel \

python-simplejson python-pyparsing

Step 5. Install the OpenERP server, and optional web client, code

I tend to use wget for this sort of thing and I download the files to my home directory.

Make sure you get the latest version of the application files. At the time of writing this it’s 6.0.3; I got the download links from their download page.

wget http://www.openerp.com/download/stable/source/openerp-server-6.0.3.tar.gz

And if you want the web client:

wget http://www.openerp.com/download/stable/source/openerp-web-6.0.3.tar.gz

Now install the code where we need it: cd to the /opt/openerp/ directory and extract the tarball(s) there.

cd /opt/openerp

sudo tar xvf ~/openerp-server-6.0.3.tar.gz

sudo tar xvf ~/openerp-web-6.0.3.tar.gz

Next we need to change the ownership of all the files to the openerp user and group.

sudo chown -R openerp: *

And finally, the way I have done this is to copy the server and web client directories to something with a simpler name so that the configuration files and boot scripts don’t need constant editing (I call them, rather unimaginatively, server and web). I started out using a symlink solution, but I found that when it comes to upgrading, it seems to make more sense to me to just keep a copy of the files in place and then overwrite them with the new code. This way you keep any custom or user-installed modules and reports etc. all in the right place.

sudo cp -a openerp-server-6.0.3 server

sudo cp -a openerp-web-6.0.3 web

As an example, should OpenERP 6.0.4 come out next, I can extract the tarballs into /opt/openerp/ as above. I can do any testing I need, then copy the contents of the new directory over the existing one (e.g. sudo cp -a openerp-server-6.0.4/. server/; note that repeating the original command as-is would create a nested directory now that server already exists) so that the modified files will overwrite as needed and any custom modules, report templates and such will be retained. Once satisfied the upgrade is stable, the older 6.0.3 directories can be removed if wanted.
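One detail matters when copying a new release over an existing directory: if the destination already exists, cp places the source directory inside it unless you copy the contents with a trailing "/.". The sketch below demonstrates the overlay in a scratch directory; `overlay_release` and the file names are invented for the example.

```shell
#!/bin/sh
# Sketch: overlay a newly extracted release onto the live directory.
# The trailing "/." copies the *contents* of the new tree into the existing
# one; without it, cp would create a nested openerp-server-x.y.z directory
# inside "server". overlay_release is a hypothetical helper name.
overlay_release() {
    new_tree="$1"
    live_dir="$2"
    cp -a "$new_tree/." "$live_dir/"
}

# Demonstration in a scratch directory (the file names are invented).
scratch="$(mktemp -d)"
mkdir -p "$scratch/server/addons/my_custom_module" \
         "$scratch/openerp-server-6.0.4/bin"
echo "old" > "$scratch/server/release.txt"
echo "new" > "$scratch/openerp-server-6.0.4/release.txt"

overlay_release "$scratch/openerp-server-6.0.4" "$scratch/server"

cat "$scratch/server/release.txt"                  # file from the new release
ls -d "$scratch/server/addons/my_custom_module"    # custom module still there
rm -rf "$scratch"
```

On the real server the call would be run with sudo from /opt/openerp, followed by the same chown as before so the new files belong to the openerp user.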

That’s the OpenERP server and web client software installed. The last steps to a working system are to set up the two (server and web client) configuration files and associated init scripts so it all starts and stops automatically when the server boots and shuts down.

Step 6. Configuring the OpenERP application

The default configuration file for the server (in /opt/openerp/server/doc/) could really do with laying out a little better and a few more comments in my opinion. I’ve started to tidy up this config file a bit and here is a link to the one I’m using at the moment (with the obvious bits changed). You need to copy or paste the contents of this file into /etc/ and call the file openerp-server.conf. Then you should secure it by changing ownership and access as follows:

sudo chown openerp:root /etc/openerp-server.conf

sudo chmod 640 /etc/openerp-server.conf

The above commands make the file owned and writeable only by the openerp user and only readable by openerp and root.
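If octal modes are not second nature, it can help to see what 640 looks like on a scratch file before applying it to the real config. This is just an illustration using a temporary file; nothing in /etc is touched.

```shell
#!/bin/sh
# Show what mode 640 means in practice, using a scratch file rather than the
# real /etc/openerp-server.conf.
scratch="$(mktemp)"
chmod 640 "$scratch"

# The first ten characters of "ls -l" are the file type and permissions:
#   rw- for the owner, r-- for the group, --- for everyone else.
perms="$(ls -l "$scratch" | cut -c1-10)"
echo "$perms"

rm -f "$scratch"
```

With the owner set to openerp and the group to root, that translates to read/write for openerp, read-only for root's group and no access for anyone else.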

To allow the OpenERP server to run initially, you should only need to change one line in this file. Toward the top of the file change the line db_password = ******** to have the same password you used way back in step 3. Use your favourite text editor here. I tend to use nano, e.g. sudo nano /etc/openerp-server.conf

Once the config file is edited, you can start the server if you like just to check if it actually runs.

/opt/openerp/server/bin/openerp-server.py --config=/etc/openerp-server.conf

It won’t really work just yet as it isn’t running as the openerp user. It’s running as your normal user so it won’t be able to talk to the PostgreSQL database. Just type Ctrl+C to stop the server.

Step 7. Installing the boot script

For the final step we need to install a script which will be used to start-up and shut down the server automatically and also run the application as the correct user. Here’s a link to the one I’m using currently.

Similar to the config file, you need to either copy it or paste the contents of this script to a file in /etc/init.d/ and call it openerp-server. Once it is in the right place you will need to make it executable and owned by root:

sudo chmod 755 /etc/init.d/openerp-server

sudo chown root: /etc/init.d/openerp-server

In the config file there’s an entry for the server’s log file. We need to create that directory first so that the server has somewhere to log to and also we must make it writeable by the openerp user:

sudo mkdir /var/log/openerp

sudo chown openerp:root /var/log/openerp

Step 8. Testing the server

To start the OpenERP server type:

sudo /etc/init.d/openerp-server start

You should now be able to view the logfile and see that the server has started.

less /var/log/openerp/openerp-server.log

If there are any problems starting the server now you need to go back and check. There’s really no point ploughing on if the server doesn’t start…

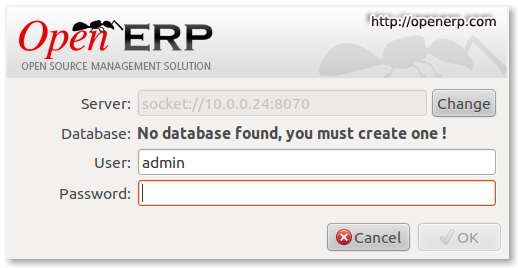

OpenERP - First Login

If you now start up the GTK client and point it at your new server you should see a message like this:

Which is a good thing. It means the server is accepting connections and you do not have a database configured yet. I will leave configuring and setting up OpenERP as an exercise for the reader. This is a how to for installing the server. Not a how to on using and configuring OpenERP itself…

What I do recommend you do at this point is to change the super admin password to something nice and strong. By default it is “admin” and with that a user can create, backup, restore and drop databases (in the GTK client, go to the file menu and choose the Databases -> Administrator Password option to change it). This password is written as plain text into the /etc/openerp-server.conf file. Hence why we restricted access to just openerp and root.

One rather strange thing I’ve just realised is that when you change the super admin password and save it, OpenERP completely re-writes the config file. It removes all comments and scatters the configuration entries randomly throughout the file. I’m not sure as of now if this is by design or not.

Now it’s time to make sure the server stops properly too:

sudo /etc/init.d/openerp-server stop

Check the logfile again to make sure it has stopped and/or look at your server’s process list.

Step 9. Automating OpenERP startup and shutdown

If everything above seems to be working OK, the final step is to make the script start and stop automatically with the Ubuntu Server. To do this type:

sudo update-rc.d openerp-server defaults

You can now try rebooting your server if you like. OpenERP should be running by the time you log back in.

If you type ps aux | grep openerp you should see a line similar to this:

openerp 708 3.8 5.8 181716 29668 ? Sl 21:05 0:00 python /opt/openerp/server/bin/openerp-server.py -c /etc/openerp-server.conf

Which shows that the server is running. And of course you can check the logfile or use the GTK client too.

Step 10. Configure and automate the Web Client

Although it’s called the web client, it’s really another server-type application which [ahem] serves OpenERP to users via a web browser instead of the GTK desktop client.

If you want to use the web client too, it’s basically just a repeat of steps 6, 7, 8 and 9.

The default configuration file for the web client (which can be found in /opt/openerp/web/doc/openerp-web.cfg) is laid out more nicely than the server one and should work as is when both the server and web client are installed on the same machine as we are doing here. I have changed one line to turn on error logging and point the file at our /var/log/openerp/ directory. For our installation, the file should reside in /etc/, be called openerp-web.conf and have its owner and access rights set as with the server configuration file:

sudo chown openerp:root /etc/openerp-web.conf

sudo chmod 640 /etc/openerp-web.conf

Here is a web client boot script. This needs to go into /etc/init.d/, be called openerp-web and be owned by root and executable.

sudo chmod 755 /etc/init.d/openerp-web

sudo chown root: /etc/init.d/openerp-web

You should now be able to start the web server by entering the following command:

sudo /etc/init.d/openerp-web start

Check the web client is running by looking in the log file, looking at the process list and, of course, connecting to your OpenERP server with a web browser. The web client by default runs on port 8080 so the URL to use is something like this: http://my-ip-or-domain:8080

Make sure the web client stops properly:

sudo /etc/init.d/openerp-web stop

And then configure it to start and stop automatically.

sudo update-rc.d openerp-web defaults

You should now be able to reboot your server and have the OpenERP server and web client start and stop automatically.

I think that will do for this post. It’s long enough as it is!

I’ll do a part 2 in a little while where I’ll cover using apache, ssl and mod_proxy to provide encrypted access to all services.

[UPDATE: Part 2 is here]

Chandler Calendar Server (Cosmo) 1.0 Released

Now here’s a great OSS tool that seems to get less attention than it is due. Congratulations to the chaps at the OSAF on getting the 1.0 release out. It’s a great product.

We’ve been using this calendar server for quite a while now and without any incidents, failures or operational problems. I shall probably upgrade it to the 1.0 in a short while, but seeing how reliable our 0.13svn system has been I’m a bit reluctant – you know the old adage; “if it ain’t broke, don’t fix it!”.

So, what’s a calendar server then?

Think of Google Calendar or something similar that allows you to run multiple calendars and decide who gets to see what bits of your life story.

Cosmo is one of these. It supports various communications methods including the public IETF standard CalDAV protocol to talk to calendaring clients (iCal, Sunbird, Lightning…) and it also has a built-in web interface so you can access your calendar when away from your desk/laptop computers.

The neat thing about the way Cosmo works is the way you manage and publish your separate calendars (called collections). You issue tickets that can be everlasting or time-limited and can provide full read/write access, read-only access or just show free/busy status, and you can send these tickets to as many people as you like – no need for creating accounts and passwords for the recipients.

Here’s a screenshot of Mozilla Thunderbird using the Lightning (calendaring) plugin. All the data is resident on our Chandler calendar server and is accessed via the CalDAV protocol.

The list down the left shows the various calendar collections to which I have access and the main screen shows all the events and tasks color coded for the month of June.

Here’s a similar shot but of the Cosmo UI in a web browser.

Cosmo is a Java application that runs in a Tomcat server. We have ours running on my little low power VIA C7N server and it has been running happily for a year or so with no interruption to service.

Interestingly, Google has just made available a CalDAV interface to their calendar system too. It is a bit rough around the edges currently and is only supposed to support Apple’s iCal client but thanks to a comment from Roberto via the cosmo mailing list, I made a brief test with Lightning using CalDAV and it appears to work O.K. But don’t take my word for it: in Sunbird or Lightning, use the following URL to talk to your Google calendar:

https://www.google.com/calendar/dav/UserName@gmail.com/events

It’s alright, although managing multiple collections, or calendars, with Google is nowhere near as easy as it is using Cosmo. But being able to now collate all your calendars into your desktop with Lightning and CalDAV is great!