How to install a Squid & Dansguardian content filter on Ubuntu Server

I’m a family man and a geek, so our household has both children and lots of tech; there are 6 or so computers, various tablets, smartphones and other devices capable of connecting to, and displaying content from, the Internet.

For a while now I’ve wanted to provide a degree of content filtering on our network to prevent accidental, or deliberate, access to some of the worst things the Internet has to offer. What I didn’t want to do however was blindly hand control of this very important job to my ISP (as our beloved leader would like us all to do). Also, I absolutely believe this is one of my responsibilities as a parent; it is not anyone else’s. In addition, there are several problems I have with our government’s chosen approach:

- Filtering at the ISP network-side means the ISP must try and inspect all my internet traffic all of the time (what else could they potentially do with this information I wonder?)

- If the ISP’s filter prevents access to content which we feel that our kids should be able to access, how can I change that? Essentially I can’t.

- I reckon that most kids of mid-teenage years will have worked out ways to bypass these filters anyway (see footnote) leaving more naive parents in blissful ignorance; thinking their kids are protected when in fact they are not.

With the above in mind I set about thinking how I could provide a degree of security on our home network using tried and trusted Open Source tools…

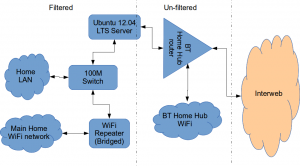

Firstly, this is how our network looked before.

The BT Router is providing the DHCP service in the above diagram.

The Ubuntu 12.04 Server is called vimes (after Commander Vimes in the Discworld novels by Terry Pratchett) and is still running the same hardware that I described way back in 2007! It’s a low power VIA C7 processor, 1G of RAM and it now has a couple of Terabytes of disk. It’s mainly used as a central backup controller and dlna media store/server for the house.

I never did get Untangle working on it, but now it seemed like a good device to use to do some filtering… There are loads of instructions on the Internet about using Squid & Dansguardian but none covered quite what I wanted to achieve: A dhcp serving, bridging, transparent proxy content filter.

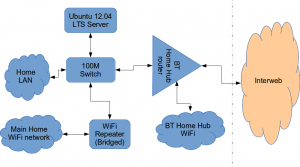

Architecturally, my network needed to look like this:

As you can see above, the physical change is rather negligible. The Ubuntu server now sits between the home LAN and the broadband router rather than as just another network node on the LAN as it was before.

The configuration of the server to provide what I required can be broken down into several steps.

1. Get the Ubuntu server acting as a transparent bridge

This is relatively straightforward. First install the bridge-utils package: sudo apt-get install bridge-utils

Then I made a backup of my /etc/network/interfaces file and replaced it with this one:

# This file describes the network interfaces available on your system

# and how to activate them. For more information, see interfaces(5).

# The loopback network interface

auto lo

iface lo inet loopback

# Set up interfaces

iface eth0 inet manual

iface eth1 inet manual

# Bridge setup

auto br0

iface br0 inet static

bridge_ports eth0 eth1

address 192.168.1.2

broadcast 192.168.1.255

netmask 255.255.255.0

gateway 192.168.1.1

Probably the most interesting part of this file is where we assign a static IP address to the bridge itself. Without this I would not be able to connect to this server as both ethernet ports are now just transparent bridge ports so not actually listening for IP traffic at all.

(Obviously you will need to determine the correct IP address scheme for your own network)

2. Disable DHCP on the router and let Ubuntu do it instead

The reason for this is mostly down to the BT Home Hub… For some bizarre reason, BT determined that they should control what DNS servers you can use. Although I’m not using it right now, I might choose to use OpenDNS for example, but I can’t change the DNS addresses served by the BT Home Hub router so the only way I can control this is to turn off DHCP on the router altogether and do it myself.

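Install the dhcp server: sudo apt-get install isc-dhcp-server (I originally used the older dhcp3-server name, which on 12.04 is a transitional package that pulls in the same thing).

Tell the dhcp server to listen for requests on the bridge port we created before by editing the file /etc/default/isc-dhcp-server so that the INTERFACES line reads: INTERFACES="br0".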

Then edit the dhcp configuration file /etc/dhcp/dhcpd.conf so we allocate the IP addresses we want to our network devices. This is how mine looks:

ddns-update-style none;

default-lease-time 600;

max-lease-time 7200;

# If this DHCP server is the official DHCP server for the local

# network, the authoritative directive should be uncommented.

authoritative;

# Use this to send dhcp log messages to a different log file (you also

# have to hack syslog.conf to complete the redirection).

log-facility local7;

subnet 192.168.1.0 netmask 255.255.255.0 {

range 192.168.1.16 192.168.1.254;

option subnet-mask 255.255.255.0;

option routers 192.168.1.1;

#Google DNS

option domain-name-servers 8.8.8.8, 8.8.4.4;

#OpenDNS

#option domain-name-servers 208.67.222.222, 208.67.220.220;

option broadcast-address 192.168.1.255;

}

There are many options and choices to make regarding setting up your DHCP server. It is extremely flexible; you will probably need to consult the man pages and other on-line resources to determine what is best for you. Mine is very simple. It serves one block of IP addresses within the range 192.168.1.16 to 192.168.1.254 to all devices. Currently I’m using Google’s DNS servers but as you can see I’ve also added OpenDNS as a comment so I can try it later if I want to.
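As a quick sanity check on the range above, here is a minimal sketch that counts how many addresses a DHCP pool hands out. The helper names ip_to_int and pool_size are made up for this example, and it assumes a Bourne-style shell such as bash or dash.

```shell
# ip_to_int ADDRESS: convert a dotted-quad IPv4 address to an integer.
# The body runs in a subshell so the changed IFS does not leak out.
ip_to_int() (
    IFS=.
    set -- $1    # split the address into its four octets
    echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
)

# pool_size FIRST LAST: number of addresses in an inclusive range.
pool_size() {
    echo $(( $(ip_to_int "$2") - $(ip_to_int "$1") + 1 ))
}
```

Running pool_size 192.168.1.16 192.168.1.254 gives the size of my pool, with the addresses below .16 left free for statically addressed things like the router and the bridge. Before restarting the service, sudo dhcpd -t -cf /etc/dhcp/dhcpd.conf should report any syntax errors in the configuration file.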

3. Install Squid and get it working as a transparent proxy using IPTables

This bit took a while to get right but, as with most things it seems to me, in the end the actual configuration is fairly straightforward.

Install Squid: sudo apt-get install squid.

Edit the Squid configuration file /etc/squid3/squid.conf… By default this file contains a lot of settings. I made a backup and then reduced it to just those lines that needed changing so it looked like this:

http_port 3128 transparent

acl localnet src 192.168.1.0/24

acl localhost src 127.0.0.1/255.255.255.255

acl CONNECT method CONNECT

http_access allow localnet

http_access allow localhost

always_direct allow all

cache_dir aufs /var/spool/squid3 50000 16 256

Probably the most interesting part in the above is the word “transparent” after the proxy port. Essentially this means we do not have to configure every browser on our network: http://en.wikipedia.org/wiki/Proxy_server#Transparent_proxy. The final line of the file is just some instructions to configure where the cache is stored and how big it is. Again, there are tons of options available which the reader will need to find out for themselves…

Simply bridging the two ports passes traffic straight through, so getting the LAN’s web traffic into the proxy needs a little more configuration on the server: ebtables, to tell the Linux kernel’s bridge which frames to hand up to the IP stack, and iptables, to redirect particular TCP ports to the proxy.

First I installed ebtables: sudo apt-get install ebtables

My very simplistic understanding of the following command is that it essentially tells the bridge to identify IP traffic for port 80 (http) and pass this up to the kernel’s IP stack for further processing (routing) which we then use iptables to handle.

sudo ebtables -t broute -A BROUTING -p IPv4 --ip-protocol 6 --ip-destination-port 80 -j redirect --redirect-target ACCEPT

Then we tell iptables to forward all port 80 traffic from the bridge to our proxy:

sudo iptables -t nat -A PREROUTING -i br0 -p tcp --dport 80 -j REDIRECT --to-port 3128

Restart Squid: sudo service squid3 restart

At this point http browser traffic should now be passing through your bridge and squid proxy before going on to the router and Internet. You can test to see if it is working by tailing the squid access.log file.
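Watching the log scroll past works, but a summary is sometimes easier to read. The following is a rough sketch, assuming Squid’s default native log format (where the fourth field looks like TCP_MISS/200); the function name squid_result_summary is invented for this example.

```shell
# squid_result_summary LOGFILE: count requests per Squid result code
# (TCP_MISS, TCP_HIT, ...), most frequent first.
squid_result_summary() {
    awk '{
        split($4, parts, "/")      # "TCP_MISS/200" -> "TCP_MISS"
        count[parts[1]]++
    }
    END {
        for (code in count) print count[code], code
    }' "$1" | sort -rn
}
```

On my setup I would expect the log to be /var/log/squid3/access.log (an assumption based on the Ubuntu squid3 package defaults), so sudo tail -f /var/log/squid3/access.log shows requests live, and the function above can be pointed at a copy of that file. If nothing appears in the log while you browse, the ebtables or iptables rule is the first place to look.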

I found that squid seemed to be very slow at this juncture. So I resorted to some google fu and looked for some help on tuning the performance of the system. I came across this post and decided to try the configuration suggestions by adding the following lines to my squid.conf file:

#Performance Tuning Options

hosts_file /etc/hosts

dns_nameservers 8.8.8.8 8.8.4.4

cache_replacement_policy heap LFUDA

cache_swap_low 90

cache_swap_high 95

cache_mem 200MB

logfile_rotate 10

memory_pools off

maximum_object_size 50 MB

maximum_object_size_in_memory 50 KB

quick_abort_min 0 KB

quick_abort_max 0 KB

log_icp_queries off

client_db off

buffered_logs on

half_closed_clients off

log_fqdn off

This made an immediate and noticeable difference to the performance; enough so in fact that I haven’t yet bothered to go any further with tuning investigations. Thanks to the author Tony at last.fm for the suggestions.

4. Install Dansguardian and get it filtering content

sudo apt-get install dansguardian is all you need to install the application.

To get it to work with our proxy I needed to make a couple of changes to the configuration file /etc/dansguardian/dansguardian.conf.

First, remove or comment out the line at the top that reads UNCONFIGURED - Please remove this line after configuration. I just prefixed it with a #.

Next we need to configure the ports by changing two lines so they look like this:

filterport = 8080

proxyport = 3128

Finally, and I think this is right, we need to set it so that Dansguardian and squid are both running as the same user so edit these two lines:

daemonuser = 'proxy'

daemongroup = 'proxy'
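If you would rather script these edits than make them by hand, something along these lines might do it. It is only a sketch: dg_configure is a name I have made up, the sed patterns assume each setting sits on its own "key = value" line as described above, and it is best tried on a copy of the file first.

```shell
# dg_configure FILE: apply the Dansguardian edits described above.
# Works through a temporary file so the original permissions are kept.
dg_configure() {
    conf=$1
    tmp=$(mktemp) || return 1
    sed -e 's/^UNCONFIGURED/#UNCONFIGURED/' \
        -e 's/^filterport[[:space:]]*=.*/filterport = 8080/' \
        -e 's/^proxyport[[:space:]]*=.*/proxyport = 3128/' \
        -e "s/^#*[[:space:]]*daemonuser[[:space:]]*=.*/daemonuser = 'proxy'/" \
        -e "s/^#*[[:space:]]*daemongroup[[:space:]]*=.*/daemongroup = 'proxy'/" \
        "$conf" > "$tmp" && cat "$tmp" > "$conf"
    status=$?
    rm -f "$tmp"
    return $status
}
```

For example: cp /etc/dansguardian/dansguardian.conf /tmp/dg.conf, then dg_configure /tmp/dg.conf, then compare the two files with diff before copying anything back.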

As you will see in that file, there are loads of other configuration options for Dansguardian and I will leave it up to the reader to investigate these at their leisure.

One suggestion I came across on my wanderings around the Interwebs was to grab a copy of one of the large collections of blacklisted sites and install it into /etc/dansguardian/blacklists/. I used the one linked to from the Dansguardian website, http://urlblacklist.com/, which says it is OK to download once for free. As I understand it, having a blacklist reduces the need for Dansguardian to parse every URL or all content, but it shouldn’t be relied on as the only mechanism as the blacklist will obviously get out of date pretty quickly.

Dansguardian has configurable lists of “phrases” and “weights” that you can tailor to suit your needs.

Now that’s installed we need to go back and reconfigure the iptables rules so that traffic is routed to Dansguardian first rather than straight to Squid, and also enable communication between Squid and Dansguardian. The existing redirect rule lives in the nat table, so flush (empty) it by running sudo iptables -t nat -F; a plain iptables -F only clears the filter table.

Now re-enter the rules as follows:

sudo iptables -t nat -A PREROUTING -i br0 -p tcp --dport 80 -j REDIRECT --to-ports 8080

sudo iptables -t nat -A OUTPUT -p tcp --dport 80 -m owner --uid-owner proxy -j ACCEPT

sudo iptables -t nat -A OUTPUT -p tcp --dport 3128 -m owner --uid-owner proxy -j ACCEPT

sudo iptables -t nat -A OUTPUT -p tcp --dport 3128 -j REDIRECT --to-ports 8080
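Because the order of these rules matters, it can help to generate them in one go and read them over before applying anything. This is a minimal sketch of that idea; print_filter_rules is a hypothetical helper, and its defaults (br0, 8080, 3128, proxy) are simply the values used in this post.

```shell
# print_filter_rules [IFACE] [FILTER_PORT] [PROXY_PORT] [PROXY_USER]
# Prints the nat-table commands without running them (a dry run).
print_filter_rules() {
    iface=${1:-br0}
    filter_port=${2:-8080}
    proxy_port=${3:-3128}
    proxy_user=${4:-proxy}
    cat <<EOF
iptables -t nat -F
iptables -t nat -A PREROUTING -i $iface -p tcp --dport 80 -j REDIRECT --to-ports $filter_port
iptables -t nat -A OUTPUT -p tcp --dport 80 -m owner --uid-owner $proxy_user -j ACCEPT
iptables -t nat -A OUTPUT -p tcp --dport $proxy_port -m owner --uid-owner $proxy_user -j ACCEPT
iptables -t nat -A OUTPUT -p tcp --dport $proxy_port -j REDIRECT --to-ports $filter_port
EOF
}
```

Run print_filter_rules on its own to review the output, then print_filter_rules | sudo sh to apply it. Note that the first printed command empties the whole nat table, so leave it out if you have other NAT rules you want to keep.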

Restart Squid and Dansguardian: sudo service squid3 restart && sudo service dansguardian restart.

Now if you try to connect to the internet from behind the server your requests should be passed through Dansguardian and Squid automatically. If you try and visit something that is inappropriate your request should be blocked.

If it all seems to be working OK then I suggest making your ebtables and iptables rules permanent so they are restored after a reboot.

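This can be achieved for iptables by saving the rules to a file, for example sudo sh -c 'iptables-save > /etc/iptables.rules', and restoring them at boot, for instance with a pre-up iptables-restore < /etc/iptables.rules line in the br0 stanza of /etc/network/interfaces. Running sudo iptables-save on its own only prints the current rules; it does not make them permanent.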

I followed these very helpful instructions to achieve a similar thing for the ebtables rule.

And that’s it. Try rebooting the server to make sure that it all still works without you having to re-configure everything. Then ask your kids and wife to let you know if things that they want to get to are being blocked. YOU now have the ability to control this – not your ISP… 😀

Footnotes

Be aware that on the network diagrams above the Wifi service provided by the BT Home Hub router, and the LAN on the router side of the server, are not protected by these instructions. For me this is fine as the coverage of that Wifi network only makes it as far as the Kitchen anyway. And if it was more visible I could always change the key and only let my wife and me have access.

Also, I should make it clear that I know what I have above is not foolproof. I am completely aware that filtering/monitoring encrypted traffic is virtually impossible and there are plenty of services available that provide ways to circumvent what I have here. But I am also not naive and I reckon that if my kids have understood enough about networking and protocols etc. to be able to use tunnelling proxies or VPN services then they are probably mature enough to decide for themselves what they want to look at.

Of course there are plenty of additional mechanisms one can put in place if desired.

- Time-based filters preventing any Internet access at all at certain times

- Confiscation of Internet connected devices at bedtime

- Placing computers and gaming consoles in public rooms of the house and not in bedrooms

- And many more I’m sure you can think of yourself

As I see it, the point is simply this: As a parent, this is your responsibility…

Asterisk, Zaptel, Oslec and Ubuntu Server [Updated]

I have recently migrated my server at home from a custom Linux build to Ubuntu Server (8.10 Intrepid). The main migration went very smoothly and I learned a few new tricks on the way too.

One function the server performs is as my telephone system for work and home. It runs Asterisk. I have a couple of IAX2 trunks from our VOIP provider for mine and my wife’s businesses and I also have a cheap x100p clone analogue card for PSTN backup purposes. On my old system software, I had compiled the device drivers (zaptel) and kernel modules for the card manually and used a, frankly fantastic, echo canceller called Oslec (the Open Source Line Echo Canceller). You can read the couple of posts I made about when I first tried it out here.

On my new server OS, I installed the Asterisk server via Ubuntu’s package management system sudo apt-get install asterisk. After some digging around on the ‘net (and it wasn’t obvious) I discovered that the zaptel drivers (for the PSTN hardware) need to be installed slightly differently:

sudo m-a -t build zaptel

This retrieves the zaptel package and builds it for your running kernel. Run sudo m-a prepare in advance of this to retrieve your Linux kernel headers.

The m-a (Module Assistant) command will compile and create a .deb package in the /usr/src directory. On my system the package was called zaptel-modules-2.6.27-11-server_1.4.11~dfsg-2+2.6.27-11.27_i386.deb.

It can then be installed using dpkg: sudo dpkg -i zaptel-modules-2.6.27-11-server_1.4.11~dfsg-2+2.6.27-11.27_i386.deb.

This went fine and I had read on launchpad that as of an earlier version of the zaptel package the Oslec echo canceller was now the default. Unfortunately this didn’t quite work as I expected. The zaptel module was in fact using the standard MG2 EC which is not very good with my x100p card at all.

After a bit more digging around in the source code, there is a file in the zaptel package called zconfig.h which is where the chosen EC is defined. It is specified as MG2 in the package. What I did to fix it was as follows.

- Unpack the zaptel.tar.bz2 package that was in /usr/src.

- Edit the kernel/zconfig.h file so the line #define ECHO_CAN_MG2 is commented out, and add a line that reads #define ECHO_CAN_OSLEC instead.

- Re-assemble the zaptel package: sudo tar jcvf zaptel.tar.bz2 modules (“modules” is the directory name where the zaptel package extracts to).

- Delete the existing zaptel-blah-blah.deb file and the modules directory too.

- Re-run the m-a -t build zaptel command.

Thanks to Tzafir Cohen on the asterisk mailing list for this: there is a far simpler method to use for the time being. The wrong default is a known bug which is now fixed in the development tree, so I guess the workaround will be unnecessary once the package has been updated. Do please check first if you are following this in the months to come. Anyway, instead of the commands above, these commands work for me and are far simpler:

sudo m-a -f get zaptel-source

This simply gets the source package and saves it in /usr/src.

sudo ECHO_CAN_NAME=OSLEC m-a -t a-i zaptel

This builds and installs the modules and tells the build scripts to choose the Oslec EC by default. The -t switch puts the command into text mode so you actually see what is going on. I find the process rather opaque and uninformative without this switch.

After rebuilding, the zaptel module now requires, and loads, the Oslec EC by default. The command modinfo zaptel is a good test. The output of it should be something like this:

filename: /lib/modules/2.6.27-11-server/misc/zaptel.ko

version: 1.4.11

license: GPL

description: Zapata Telephony Interface

author: Mark Spencer

srcversion: 4433ADDE0493C798A455677

depends: oslec,crc-ccitt

vermagic: 2.6.27-11-server SMP mod_unload modversions 686

parm: debug:int

parm: deftaps:int

Note the “depends” line.
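If you want to check that “depends” line from a script rather than by eye, here is a small sketch. The function name uses_oslec is my own invention; it just looks for oslec in a comma-separated dependency list such as the one modinfo prints.

```shell
# uses_oslec DEPENDS: succeed if "oslec" appears in a comma-separated
# module dependency list, e.g. "oslec,crc-ccitt".
uses_oslec() {
    case ",$1," in
        *,oslec,*) return 0 ;;
        *)         return 1 ;;
    esac
}
```

On the server itself this could be used as uses_oslec "$(modinfo -F depends zaptel)" && echo "Oslec is in use", where modinfo -F depends prints only the depends field.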

You could also type lsmod | grep 'zaptel' once you have reloaded your server:

zaptel 199844 5 wcfxo

oslec 16668 1 zaptel

crc_ccitt 10112 1 zaptel

This command shows the oslec ec module installed along with the zaptel and wcfxo drivers.

One final point to note. If you just want to load a particular telephony hardware driver and not all of them, I think you need a file /etc/default/zaptel like this with the relevant driver(s) uncommented:

TELEPHONY=yes

DEBUG=yes

# Un-comment as per your requirements; modules to load/unload

#Module Name Hardware

#MODULES="$MODULES tor2" # T400P - Quad Span T1 Card

#E400P - Quad Span E1 Card

#MODULES="$MODULES wct4xxp" # TE405P - Quad Span T1/E1 Card (5v version)

# TE410P - Quad Span T1/E1 Card (3.3v version)

#wct4xxp_ARGS="t1e1override=15" # Additional parameters for TE4xxP driver

#MODULES="$MODULES wct1xxp" # T100P - Single Span T1 Card

# E100P - Single Span E1 Card

#MODULES="$MODULES wcte11xp" # TE110P - Single Span T1/E1 Card

#MODULES="$MODULES wctdm24xxp" # TDM2400P - Modular FXS/FXO interface (1-24 ports)

MODULES="$MODULES wcfxo" # X100P - Single port FXO interface

# X101P - Single port FXO interface

#MODULES="$MODULES wctdm" # TDM400P - Modular FXS/FXO interface (1-4 ports)

I can’t recall the exact origins of this file or whether it is really necessary, but I had it on my old system and the Ubuntu-provided zaptel init script checks for its presence, although it doesn’t look like it does much with the contents…

Hopefully this will help others and also act as a bit of an aide-mémoire for me when I next build an Ubuntu server with Asterisk.

A shared “drop-box” using Samba [Updated]

Here’s a neat thing I managed to sort out the other day.

If you have read any of the “Untangle, Asterisk and File Server; All-in-One” series of posts before, then you will know that I’ve got a neat little VIA CN700 server for our home that is running all sorts of good stuff.

One of the things I have wanted to do for a while was to create a shared directory on the server so any family member can put stuff in there (like music files etc) but not be able to delete anything so as to prevent accidentally removing thousands of MP3s or irreplaceable digital pictures for example. This facility is apparently called a “drop-box”.

Hmmmm. Now let me think… Linux file permissions are rwx: Read Write eXecute. So, if you have write access, you can delete too. How can I fix this?

After some Googling and reading the Samba documentation it is actually pretty straightforward. Here’s how to make a drop-box on a Linux file server using Samba (CIFS) as the file sharing protocol and access mechanism.

- Create a directory somewhere on your server and give it a sensible name: I called it “shared” and put it under the /home tree.

- Create a Linux group for all users who you want to access the drop-box: I called the group “shared”. Then add your users to that group.

- Using sudo or running as root, change the directory settings as follows:

  - chmod 770 shared. This prevents access to the directory by anyone other than root, the owner and group members.

  - chown nobody:shared shared. This changes the directory ownership to a user “nobody” and the group “shared”. It is important that you use a user who is NOT a member of the shared group. Any user will do, but it must be defined in /etc/passwd. I chose “nobody” as it has very minimal permissions and is unlikely to pose any sort of security hazard. On my server, the user nobody is configured thus: nobody:x:99:99:Unprivileged User:/dev/null:/bin/false

  - chmod g+s shared/. This sets the directory’s SGID bit so that any new files or directories created in our shared directory will have their group id set to that of the shared directory. This ensures all members of the shared group can read the contents.

  - chmod +t shared. This sets the “sticky bit” of our shared directory. On Linux, setting the sticky bit means items inside the directory can be renamed or deleted only by the item’s owner, the directory’s owner, or the superuser; without the sticky bit set, any user with write and execute permissions for the directory can rename or delete contained files, regardless of owner.

- Here’s a listing of the directory showing how it should look now:

drwxrws--T 3 nobody shared 62 2008-04-15 21:48 shared
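The permission steps above can be wrapped up in one small function. This is just a sketch under my own naming (make_dropbox_dir is not a standard command); the optional second argument is the owner and group to hand the directory to, which needs root.

```shell
# make_dropbox_dir DIR [OWNER:GROUP]
# Creates DIR and gives it the drop-box permissions described above:
# rwx for owner and group only, plus the SGID and sticky bits.
make_dropbox_dir() {
    dir=$1
    owner=$2
    mkdir -p "$dir" || return 1
    if [ -n "$owner" ]; then
        chown "$owner" "$dir" || return 1   # e.g. nobody:shared, needs root
    fi
    chmod 770 "$dir" &&
    chmod g+s "$dir" &&
    chmod +t "$dir"
}
```

From a root shell, make_dropbox_dir /home/shared nobody:shared should leave a directory whose ls -ld listing starts with drwxrws--T, matching the listing above.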

Now we can set-up our share in Samba as follows:

[shared]

comment = Our Shared Data/Media

path = /home/shared/

read only = no

valid users = @shared

browseable = yes

inherit owner = yes

The valid users @shared line tells samba that only members of the “shared” group can access this share. And the line inherit owner = yes is what makes it all work. This tells samba to set the owner of any files created to the owner of the directory we are in. In this case the owner is “nobody”. As the sticky bit is set on this directory, only the user “nobody” or the superuser can remove files as their ownership is instantly changed by Samba when first created from the actual user to the user “nobody”.

After dropping a file into the shared directory over a samba connection the listing looks like this:

-rwxr--r-- 1 nobody shared 1272366 2008-04-17 14:17 14_-_Jubilee.mp3

See how the file is owned by “nobody:shared” and only has group and other read set.

It might sound like a bit of a palaver, but it doesn’t take very long to set up. This is a very useful way of creating drop-boxes for many kinds of applications.

I hope someone finds this useful, and please leave a comment if you do!

[UPDATE]

A big thanks to Simbul who noted the obvious flaw in my suggestion. Although you could safely drop files into this folder, you couldn’t create directories which was a bit of a PITA to be honest. However Simbul made a simple addition to the [shared] section that fixes this issue (See the comments at the bottom of this post for details):

[shared]

comment = Our Shared Data/Media

path = /home/shared/

read only = no

valid users = @shared

browseable = yes

inherit owner = yes

Add the following two lines:

directory mode = 3770

force directory mode = 3770

And that’s it. Thanks Simbul. It works a treat.

Pet Project

I’ve been meaning to write about this for a while now. But what with the flu, Microshaft’s ongoing corruption of the ISO and some other stuff cropping up, I just kept finding reasons to put it off. Finally however, after jotting some notes and so forth for a few days I’ve managed to get my act together.

A Pet [Open Source] Project I want to give some airtime to, and get some assistance for, is Linux From Scratch.

History

Going back into the dark ages, I had been “playing” with one Linux distribution or other from the cover of PC magazines for some time (I think it was a very early Mandrake or Suse product that first grabbed me), and found the whole system quite fascinating. The fact it was all free, and you could “LOOK” into it and see how it all worked together was a real eye opener. I was used to PCs and other computer platforms so it wasn’t all new… I grew up with VT100 terminals, DEC VAX VMS and then DOS, so command line interpreters and such were nothing new in themselves. But to get a complete OS that did stuff, was free, and actually encouraged you to examine it: I remember that making me sit up and take notice even then.

One of the very first Open Source communities I came into active contact with was the Linux From Scratch (LFS for short) community. I cannot remember how I stumbled across the project or quite how long ago either, but it was quite a few years certainly. They have a feature which encourages newcomers to register their first LFS build when it is up and running. Checking on their website today, they have 19570 users registered so far. My LFS ID is 216 and the version of “the book” I recorded as having followed was 2.4.x when I registered. Although I certainly built (tried to build) a few before getting brave enough to register 😉 Anyway, I’m guessing this would have been around 1999/2000 some time.

LFS is still a project I follow closely and have a very warm opinion of. It has taught me a great deal over the years.

What is it then?

The project – if you can’t guess from the name – is all about building a functional Linux based operating system from scratch. That is, from nothing. You start with a spare partition on your hard disk and, by following the book, you learn what makes up a GNU/Linux operating system, how that operating system works and why bits of it behave the way they do. It is an educational project and it is a brilliant educational project. You gain knowledge of not just Linux itself but Bash, compiling, device management and much, much more too. And what you also learn is what makes it all tick together… It is quite hard to explain but it’s a bit like the whole being worth more than just a simple sum of the individual parts.

LFS was started by a chap called Gerard Beekmans. The LFS project’s homepage explains the project thus:

What is Linux From Scratch?

Linux From Scratch (LFS) is a project that provides you with step-by-step instructions for building your own customized Linux system entirely from source.

Why would I want an LFS system?

Many wonder why they should go through the hassle of building a Linux system from scratch when they could just download an existing Linux distribution. However, there are several benefits of building LFS. Consider the following:

LFS teaches people how a Linux system works internally

Building LFS teaches you about all that makes Linux tick, how things work together and depend on each other. And most importantly, how to customize it to your own tastes and needs.

Building LFS produces a very compact Linux system

When you install a regular distribution, you often end up installing a lot of programs that you would probably never use. They’re just sitting there taking up (precious) disk space. It’s not hard to get an LFS system installed under 100 MB. Does that still sound like a lot? A few of us have been working on creating a very small embedded LFS system. We installed a system that was just enough to run the Apache web server; total disk space usage was approximately 8 MB. With further stripping, that can be brought down to 5 MB or less. Try that with a regular distribution.

LFS is extremely flexible

Building LFS could be compared to a finished house. LFS will give you the skeleton of a house, but it’s up to you to install plumbing, electrical outlets, kitchen, bath, wallpaper, etc. You have the ability to turn it into whatever type of system you need it to be, customized completely for you.

LFS offers you added security

You will compile the entire system from source, thus allowing you to audit everything, if you wish to do so, and apply all the security patches you want or need to apply. You don’t have to wait for someone else to provide a new binary package that (hopefully) fixes a security hole. Often, you never truly know whether a security hole is fixed or not unless you do it yourself.

Why LFS is a great platform

[When I discuss LFS I also imply the use of BLFS (Beyond Linux From Scratch) which is a fantastic resource for how to build and install the stuff that goes to make up a “useful” and “complete” Operating System.]

As some of the readers here will know, the little server I’ve built for home use is running LFS. It also runs Apache, Tomcat, MySQL, PHP and Postgresql, is a mail server, a Samba (Windows Networking) server and our telephone exchange (running Asterisk), and does a few other things too.

One of the main reasons for choosing LFS as the platform for this server is this: as it is built entirely from scratch there is no bloat and there are no unnecessary applications; the system is about as lean as you can get. The hardware I chose (very deliberately) is not the most powerful in the world; it has a mere 7 Watts power consumption. But the applications running on the server currently seem very happy and there are plenty of system resources spare. This would be very hard to achieve using a mainstream distribution as they have to cater for as generic a host platform as possible and include a huge amount of features and supporting applications that are largely superfluous for a custom-built and tailored system.

Why LFS is not a great platform

LFS is not perfect however. The hurdle that causes most LFS users eventually to fall down and revert to a mainstream distribution is that of long-term maintenance of the LFS system. There is, by default, no concept of a package management system. When you install an application, you download the source code, build the executable binaries and libraries and install them on your system. If there is a “dependency” issue like a missing library or something, this must be installed first before you can continue. In most respects this isn’t such a bad thing, but if you want to try some new app out it can involve building a great deal of software that you may realise afterwards you don’t really want. Removing the unwanted can be a PITA.

My Desktop OS is Ubuntu. It works, and is very easy to upgrade and manage.

What happens next then?

In recent weeks, there has been a great flood of discussion and debate on the LFS mailing lists. The original thread for this debate, started by a long term LFS editor called Jeremy Huntwork, has sown the seed for a process to review what LFS is all about and how it could be taken forward whilst still maintaining the core principle of being an Educational Project first and foremost.

One area where I feel the project’s new direction and strategy could really benefit is from some “new blood” with few pre-conceived ideas or historical baggage.

If you use Linux, don’t really know what is going on under the hood but want to, then please visit the LFS website, download or read on-line the current book and start working your way through it. Join the mailing lists (either directly or go through gmane and your favourite newsreader), and please contribute your views and experiences.

We really want to give LFS a new lease of life and that, IMHO, needs some fresh ideas and thinking too.

Open Source Cars and More

I love this article on zdnet from David Greenfield. It’s a round-up of what’s happening in the up and coming area of Open Source Hardware. According to David,

A burgeoning trend in open source hardware is putting up some devices on the Web — from machines that make anything (including themselves) to cars — with the specs to make them yourself (See our list below). While still in its infancy, the trend could redefine hardware cost models much as its done for software.

And there are some really neat ideas like this one which I have been following myself for a while:

Now that you’ve got Asterisk, what hardware platform will you run the software on? Usually folk settle on a Intel or AMD based-server of one kind or another. You can build your own PBX hardware with the Astfin Project or buy one for just $450 from the Free Telephony Project store.

This Asterisk appliance project has the chap who wrote the brilliant Open Source Echo Canceller I mentioned before in it.

But how about your own, Open Source Car…

Open Source isn’t just for your office. The OScar aims to be the first open source automobile. The goal is to create a utilitarian car that aims to move people from place-to-place sans a lot of the high-tech gadgetry that runs in today cards. Initial concepts call for a four-door, four meter length vehicle weighing about 1000 Kilo capable of reaching 145 KM/hour.

Cool – just the thing to keep a man happy and content in his shed for months. 😉

Remote Firefox over X/SSH

Here’s a quick tip…

I was trying to get a Firefox session running over an SSH connection between my desktop PC (Ubuntu 7.10) and the little server I’m building. The strange thing was, every time I typed firefox & at the command line prompt, it started Firefox all right; but it started a local (Ubuntu) instance of it with my local profile settings! One of the reasons I wanted to run a remote browser was so I could download files directly to that machine and so I could access some html docs on that box; as it is now headless.

A bit of Googling led me here, where the author used this command ( export MOZ_NO_REMOTE=1; firefox -profilemanager ) &. After a bit of experimentation, and more Googling, for my purposes it can be simplified to this:

firefox -no-remote &

This assumes Firefox version 2 and that your SSH connection was made using ssh -X uname@host
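As I understand it, the reason -no-remote is needed is that Firefox first looks for an instance already running on the same X display and hands over to it; with X forwarding, that is the local one. A small guard like the following can confirm you really are in a forwarded SSH session before launching. It is only a sketch, and x_forwarding_active is a made-up name; it relies on SSH_CONNECTION and DISPLAY, which OpenSSH sets for an ssh -X login.

```shell
# x_forwarding_active: succeed only inside an SSH session that has an
# X display available (as set up by "ssh -X").
x_forwarding_active() {
    [ -n "${SSH_CONNECTION:-}" ] && [ -n "${DISPLAY:-}" ]
}
```

Typed on the remote machine, x_forwarding_active && firefox -no-remote & starts the remote browser only if the forwarded display is there.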

Hope this helps someone else. It got me foxed for ages initially…